Adrian Krebs, Co-Founder & CEO of Kadoa

I just came back from one of the big alt data conferences, where I had many discussions about how the alt data landscape is evolving in the age of AI.

Alternative data means any non-traditional dataset used for investment insights. The term originally referred to any data that didn’t come from exchanges. The first "alt data" assets were credit card transaction data and web-scraped datasets, but the category has since grown so broad that it basically just means "data".

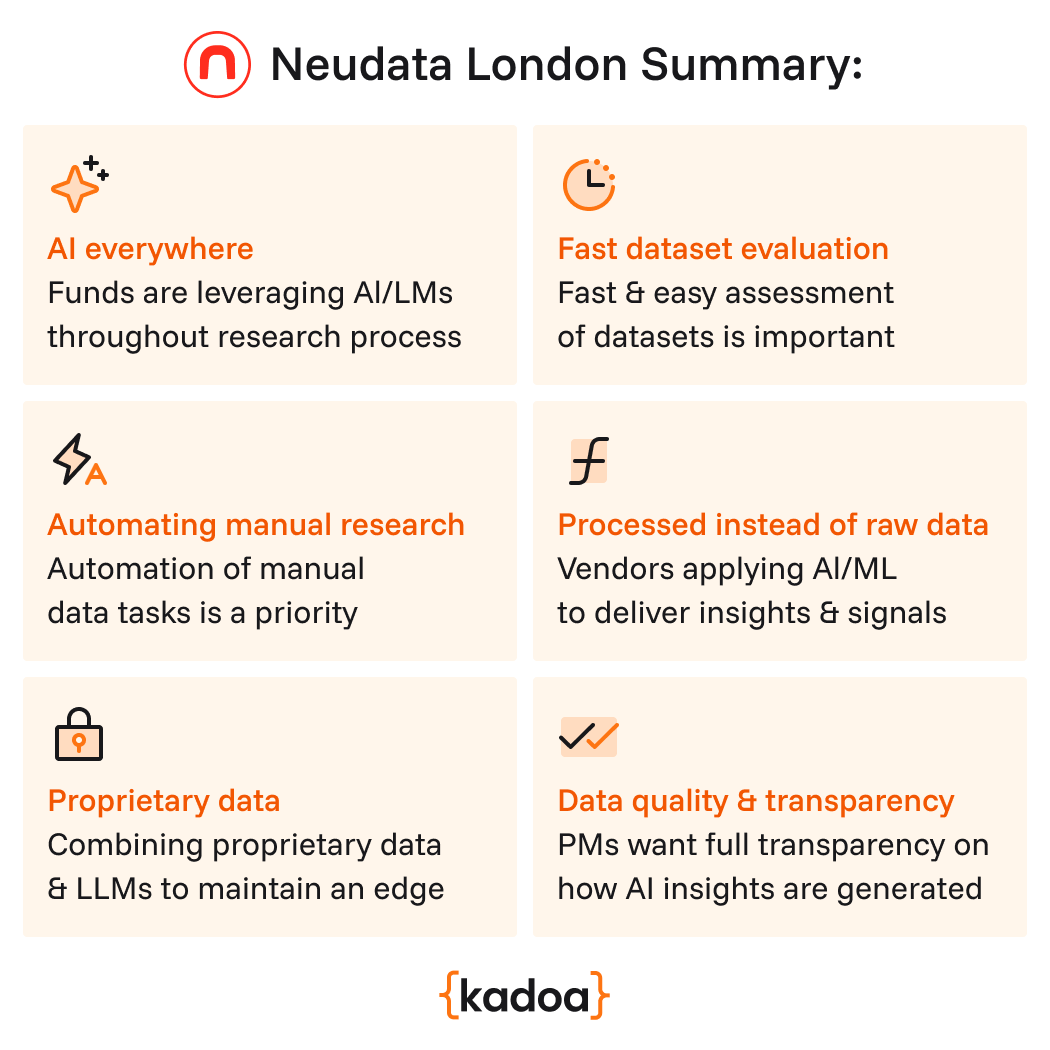

Here’s what's top of mind in the industry right now:

Investment firms were doing machine learning before it was sexy, and traditional ML is still far more important than LLMs for alpha generation. However, LLMs are now outperforming traditional NLP approaches on many text-related tasks such as classification, so companies are starting to use AI to reprocess old datasets and make them more valuable.

Generally, funds are actively integrating AI across their workflows. We heard about firms that are piloting AI across their entire research process, from data extraction and summarization to data visualization. Others mentioned building proprietary internal tools (like variations of 'Firm-GPT') specifically for tasks like Q&A on internal documents, advanced sentiment analysis, or complex entity resolution.

A recurring pain point is the sheer amount of time highly skilled analysts spend on low-value, manual data tasks. Copying and pasting information from websites, filings, or disparate sources into spreadsheets is a significant time sink and has been a limiting factor for both sell-side and buy-side analysts. There's a strong push towards automating data sourcing and centralizing data access within firms, so that all teams can use the same data and analysts are freed up for higher-value work. Ultimately this will help analysts get a faster, broader, and more digestible view of a company, sector, or industry.

Research analysts are consuming so much data, and they are mostly doing it by hand.

With a growing universe of potential datasets, data sourcing and quant teams need to efficiently evaluate if a new dataset holds potential uncorrelated alpha. Data vendors should make trial periods and data testing as frictionless as possible. The faster a potential buyer can confidently reach a "yes" or "no" decision on a dataset's value, the better it is for everyone involved. Some funds are buying the data the next day if they see it working in their tests.
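To make the "uncorrelated alpha" check a bit more concrete, here is a minimal sketch of one first-pass test a buyer might run during a trial: compare the candidate signal against the signals the fund already uses and see how strongly it overlaps. Everything here is an assumption for illustration. The function names, the toy series, and the 0.3 cutoff are hypothetical, and a real evaluation would go well beyond a simple correlation.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equally long numeric series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("series must have the same length (at least 2)")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / sqrt(var_x * var_y)


def max_abs_correlation(candidate, existing_signals):
    """Largest absolute correlation between the candidate and any existing signal."""
    return max(abs(pearson(candidate, s)) for s in existing_signals.values())


def looks_uncorrelated(candidate, existing_signals, threshold=0.3):
    """Hypothetical screen: the 0.3 threshold is an illustrative assumption."""
    return max_abs_correlation(candidate, existing_signals) < threshold


# Toy data, invented purely for illustration.
existing = {"card_spend": [1, 1, -1, -1], "web_traffic": [2, 1, 2, 1]}
redundant_candidate = [4, 2, 4, 2]   # moves in lockstep with web_traffic
novel_candidate = [1, -1, -1, 1]     # orthogonal to both existing signals

print(looks_uncorrelated(redundant_candidate, existing))
print(looks_uncorrelated(novel_candidate, existing))
```

A screen like this only answers "is this new information?", not "does it predict returns?", but it is cheap enough to run on day one of a trial, which is exactly where a fast yes or no matters.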

The data provider landscape is evolving beyond just providing raw data feeds. In the past, NLP was a huge resource bottleneck, and that barrier is coming down rapidly thanks to LLMs. Vendors are increasingly moving up the value chain by applying their own AI/ML models to generate signals on top of their datasets. We're likely going to see more "signal-as-a-service" instead of just raw, off-the-shelf data.

Expect more signal-as-a-service instead of just raw data.

As basic financial and widely available alternative datasets become more commoditized, proprietary insights from unstructured sources become more relevant. Premium value is placed on proprietary or hard-to-acquire data sources, for example specialized B2B transaction data, niche web scrapes targeting specific industries, or insights derived from expert network calls. It's not just about having that unique data, it's also about how to then process it. Connecting proprietary AI capabilities to these unique data streams is seen as key to maintaining an advantage in the market.

We've achieved peak data and there'll be no more

Amidst the excitement around AI and new data types, fundamental requirements haven't changed. Poor data quality remains the number one reason why dataset trials fail. Access to clean, reliable, point-in-time data is non-negotiable for data buyers, especially for rigorous backtesting of strategies.
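For readers less familiar with the term, "point-in-time" means a backtest may only see the values that were actually known on a given date, not later revisions. The sketch below shows the idea with a tiny in-memory history. The `Observation` record, its field names, and the `as_of` helper are hypothetical names chosen for this example, not any vendor's actual schema.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass(frozen=True)
class Observation:
    period: str         # what the value describes, e.g. "2024-Q1"
    value: float        # the reported figure
    published_on: date  # when this version of the figure became available


def as_of(history: List[Observation], period: str, when: date) -> Optional[float]:
    """Return the latest value for `period` that was already published on `when`."""
    known = [o for o in history if o.period == period and o.published_on <= when]
    if not known:
        return None  # nothing had been published yet on that date
    return max(known, key=lambda o: o.published_on).value


# Invented example: a figure that was first published, then revised later.
history = [
    Observation("2024-Q1", 100.0, date(2024, 4, 15)),  # initial release
    Observation("2024-Q1", 92.0, date(2024, 6, 1)),    # later revision
]

print(as_of(history, "2024-Q1", date(2024, 5, 1)))  # sees only the initial release
print(as_of(history, "2024-Q1", date(2024, 7, 1)))  # sees the revision
```

A dataset that stores only the latest revised value would hand a backtest dated May 2024 the revised number, which is look-ahead bias. Keeping every version together with its publication date is what makes the history usable for backtesting.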

As AI-driven insights become more integrated into investment processes, portfolio managers and compliance teams want full transparency. They need to understand where the data comes from and the methodology behind how AI models generate insights from it.

AI is definitely changing the alternative data landscape towards more automation and processed signals. Information is every fund's competitive edge and has been limited by the capacity of their data scientists.

This is changing now as data and research teams can do a lot more with a lot less by using LLMs across the entire data stack. Research teams can reduce their blind spots and data engineering bottlenecks, and ultimately get faster and better investment insights.

But even with all the AI advancements, the core needs of data buyers for efficient dataset evaluation, trusted data quality, and transparency remain the same.